In statistics and probability theory, independence is a powerful condition on two random variables. Two random variables X and Y are independent if knowing the value of X does not change the probability distribution of Y (and vice versa).

A related but distinct concept is correlation, a quantity between -1 and 1 that quantifies the linear relationship between two random variables. Independence implies 0 correlation, BUT not the other way around. Here’s an epic example of 0 correlation without independence. Assume X takes the values -1, 0, or 1, each with probability 1/3, and let Y = X^2. Since E[X] = 0 and E[X^3] = 0, we get Cov(X, Y) = E[X^3] - E[X]E[X^2] = 0, so X and Y have 0 correlation; yet they are dependent on each other, because Y is a function of X (knowing X = 0 forces Y = 0). One may interpret correlation as the linear dependence between two random variables. Thus, 0 correlation only shows that there is no linear dependence; there may still be higher-order dependence. In the previous example, X and Y have a quadratic relationship, which correlation (the linear dependence) cannot capture.
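The example can also be checked by simulation. The sketch below assumes NumPy; the sample size and seed are arbitrary choices. It draws X uniformly from {-1, 0, 1}, sets Y = X^2, and compares the sample correlation with how Y behaves once X is known.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

# X takes -1, 0, 1 with probability 1/3 each; Y is a deterministic function of X
x = rng.choice([-1, 0, 1], size=n)
y = x**2

# Sample correlation: close to 0 because Cov(X, X^2) = E[X^3] = 0
r = np.corrcoef(x, y)[0, 1]

# Dependence: unconditionally P(Y = 0) is about 1/3, but X = 0 forces Y = 0
p_y0 = np.mean(y == 0)
p_y0_given_x0 = np.mean(y[x == 0] == 0)

print(f"sample correlation = {r:.4f}")
print(f"P(Y = 0)           = {p_y0:.4f}")
print(f"P(Y = 0 | X = 0)   = {p_y0_given_x0:.4f}")
```

Correlation only looks at E[XY] - E[X]E[Y], so it misses that the conditional distribution of Y changes completely once X is known.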

Although independence is a stronger statement than 0 correlation, the two are equivalent when the random variables are jointly Gaussian. Namely, for two jointly normal random variables, 0 correlation implies independence. (Joint normality matters: two variables that are each normal on their own but not jointly normal can be uncorrelated and still dependent.) This is an appealing feature of the Gaussian and one of the main reasons some researchers like to make a Gaussian assumption.
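To see why 0 correlation gives independence in the jointly Gaussian case, write out the bivariate normal density with means μ_X, μ_Y, standard deviations σ_X, σ_Y > 0 and correlation ρ in (-1, 1) (this is the standard parametrization, not notation from the text above):

$$
p(x,y) = \frac{1}{2\pi\sigma_X\sigma_Y\sqrt{1-\rho^2}}
\exp\!\left(-\frac{1}{2(1-\rho^2)}\left[\frac{(x-\mu_X)^2}{\sigma_X^2}
- \frac{2\rho(x-\mu_X)(y-\mu_Y)}{\sigma_X\sigma_Y}
+ \frac{(y-\mu_Y)^2}{\sigma_Y^2}\right]\right).
$$

Setting ρ = 0 removes the cross term and the factor √(1-ρ²), so the density splits into two pieces:

$$
p(x,y) = \underbrace{\frac{1}{\sqrt{2\pi}\,\sigma_X}\exp\!\left(-\frac{(x-\mu_X)^2}{2\sigma_X^2}\right)}_{p(x)}
\cdot
\underbrace{\frac{1}{\sqrt{2\pi}\,\sigma_Y}\exp\!\left(-\frac{(y-\mu_Y)^2}{2\sigma_Y^2}\right)}_{p(y)},
$$

that is, the joint density is the product of the two marginal normal densities.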

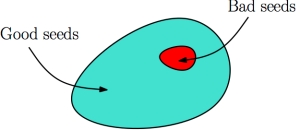

A more mathematical description of independence is as follows. Let F(x) and F(y) denote the marginal (cumulative) distribution functions of the random variables X and Y, respectively, and let F(x,y) denote their joint distribution function. X and Y are independent if and only if F(x,y) = F(x)F(y) for all x and y; namely, the joint distribution is the product of the marginals. If X and Y have densities p(x) and p(y), then independence is equivalent to p(x,y) = p(x)p(y) (again, the joint density equals the product of the marginals).
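For discrete variables the same criterion reads p(x,y) = p(x)p(y) with probability mass functions. A small sketch, using Python's standard-library `fractions` module for exact arithmetic, checks it for the earlier example (X uniform on {-1, 0, 1}, Y = X^2); the variable names are illustrative only.

```python
from fractions import Fraction
from itertools import product

# X is uniform on {-1, 0, 1}; Y = X**2
p_x = {x: Fraction(1, 3) for x in (-1, 0, 1)}

# Joint pmf p(x, y): all mass sits on the points (x, x**2)
p_xy = {(x, x * x): p for x, p in p_x.items()}

# Marginal pmf p(y), obtained by summing the joint pmf over x
p_y = {}
for (x, y), p in p_xy.items():
    p_y[y] = p_y.get(y, Fraction(0)) + p

# Collect every (x, y) where the joint pmf differs from the product of marginals;
# a single mismatch is already enough to show that X and Y are dependent
mismatches = {}
for x, y in product(p_x, p_y):
    joint = p_xy.get((x, y), Fraction(0))
    if joint != p_x[x] * p_y[y]:
        mismatches[(x, y)] = (joint, p_x[x] * p_y[y])

for (x, y), (joint, prod_of_marginals) in sorted(mismatches.items()):
    print(f"p({x}, {y}) = {joint}, p({x})p({y}) = {prod_of_marginals}")
```

For instance, p(0, 0) = 1/3 while p(0)p(0) = 1/3 · 1/3 = 1/9, so the factorization fails.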

Independence is a powerful feature in probability and statistics. Some people even claim that independence is the key feature that makes probability theory a field distinct from measure theory. Indeed, independence makes concentration of measure possible for a function of many random variables (concentration of measure means that the probability concentrates around a certain value; just think of the law of large numbers).
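A quick simulation illustrates the concentration point and why independence matters for it. The sketch assumes NumPy and uses Uniform(0, 1) variables as an arbitrary example: with IID draws, the spread of the sample mean shrinks like 1/√n, while in the extreme dependent case X_1 = X_2 = … = X_n it does not shrink at all.

```python
import numpy as np

rng = np.random.default_rng(0)
reps = 2000  # number of simulated sample means per sample size

def spread_iid(n):
    # Standard deviation of the sample mean of n IID Uniform(0, 1) draws
    draws = rng.uniform(size=(reps, n))
    return draws.mean(axis=1).std()

def spread_fully_dependent(n):
    # Extreme dependence: one Uniform(0, 1) draw copied n times,
    # so the sample mean is just that single draw
    draws = np.repeat(rng.uniform(size=(reps, 1)), n, axis=1)
    return draws.mean(axis=1).std()

sizes = (10, 100, 1000)
iid = {n: spread_iid(n) for n in sizes}
dep = {n: spread_fully_dependent(n) for n in sizes}

for n in sizes:
    print(f"n={n:5d}  IID spread={iid[n]:.4f}  fully dependent spread={dep[n]:.4f}")
```

The IID spreads should track sqrt(1/12)/√n, whereas the dependent spreads stay near sqrt(1/12) ≈ 0.289 for every n.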

Now let’s talk about statistics: testing for independence. Suppose we observe pairs (X_1, Y_1), … ,(X_n, Y_n) that form a random (IID) sample. The goal is to test whether X and Y are independent of each other.

When both random variables are discrete, a well-known method is “Pearson’s chi-squared test for independence” (https://en.wikipedia.org/wiki/Pearson%27s_chi-squared_test#Test_of_independence). Pearson’s method compares the joint distribution expected under independence (with the marginals still estimated from the sample) to the observed joint distribution.
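A minimal sketch of the statistic, assuming NumPy and SciPy (`scipy.stats.chi2` for the p-value) and a hypothetical 2x2 table of counts. The expected count in each cell under independence is row total × column total / n, which is exactly "independence, with marginals based on the sample".

```python
import numpy as np
from scipy.stats import chi2

def chi2_independence(table):
    """Pearson's chi-squared test of independence for a contingency table."""
    table = np.asarray(table, dtype=float)
    n = table.sum()
    row = table.sum(axis=1, keepdims=True)  # row totals, shape (r, 1)
    col = table.sum(axis=0, keepdims=True)  # column totals, shape (1, c)
    expected = row @ col / n                # expected counts under independence
    stat = ((table - expected) ** 2 / expected).sum()
    dof = (table.shape[0] - 1) * (table.shape[1] - 1)
    return stat, dof, chi2.sf(stat, dof)   # upper-tail p-value

counts = [[30, 10],
          [10, 30]]  # hypothetical counts
stat, dof, p_value = chi2_independence(counts)
print(stat, dof, p_value)
```

For this table every expected count is 20, so the statistic is 4 × 10²/20 = 20 with 1 degree of freedom. SciPy's `scipy.stats.chi2_contingency` computes the same test, but for 2x2 tables it applies Yates' continuity correction by default, so `correction=False` is needed to reproduce the uncorrected statistic.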

When the two variables are continuous, things are more complicated. However, if we assume the joint distribution is Gaussian, we can simply test the correlation. If the correlation is significantly non-zero, then, thanks to the powerful feature of the Gaussian (independence is equivalent to 0 correlation), we can claim they are dependent.
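Under the Gaussian assumption, the usual test of zero correlation uses t = r·√((n-2)/(1-r²)), which follows a t distribution with n-2 degrees of freedom when the true correlation is 0. A minimal sketch assuming NumPy and SciPy, applied to a simulated (hypothetical) jointly Gaussian pair:

```python
import numpy as np
from scipy.stats import t as student_t

def correlation_test(x, y):
    """Two-sided test of H0: correlation = 0, valid for jointly Gaussian data."""
    n = len(x)
    r = np.corrcoef(x, y)[0, 1]
    # t statistic for the sample correlation (undefined if |r| == 1)
    t_stat = r * np.sqrt((n - 2) / (1 - r**2))
    p_value = 2 * student_t.sf(abs(t_stat), df=n - 2)
    return r, p_value

rng = np.random.default_rng(1)
x = rng.normal(size=100)
y = 0.5 * x + rng.normal(size=100)  # hypothetical jointly Gaussian pair, true corr ≈ 0.45
r, p_value = correlation_test(x, y)
print(f"r = {r:.3f}, p-value = {p_value:.2e}")
```

`scipy.stats.pearsonr` should report the same two-sided p-value. A small p-value is evidence of dependence; a large one only says no linear dependence was detected, which amounts to independence only if joint normality really holds.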

If we do not want to make the Gaussian assumption, there are many nonparametric tests for independence. Here I list four famous approaches.

- Hoeffding’s Test. Hoeffding, Wassily. “A non-parametric test of independence.” The Annals of Mathematical Statistics (1948): 546-557 (http://projecteuclid.org/euclid.aoms/1177730150). This test compares the empirical cumulative distribution function (EDF) of (X, Y), which estimates F(x,y), with the product of the marginal EDFs, and integrates the squared difference over the joint distribution. This is like a Cramér–von Mises-type test for independence (taking the supremum over both x and y instead would give a KS-type test).

- Kernel Test. Rosenblatt, Murray. “A quadratic measure of deviation of two-dimensional density estimates and a test of independence.” The Annals of Statistics (1975): 1-14 (https://projecteuclid.org/euclid.aos/1176342996). The kernel test is very similar to Hoeffding’s test, but it uses kernel density estimators to estimate both the joint density and the marginals and then compares the joint KDE to the product of the marginal KDEs.
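Both statistics can be sketched in a few lines. The code below assumes NumPy and is illustrative only: `ecdf_statistic` is a Cramér–von Mises-style discrepancy evaluated at the sample points (in the spirit of Hoeffding’s test, not his exact D statistic); `kde_statistic` averages the squared gap between a Gaussian product-kernel joint KDE and the product of marginal KDEs over the sample points (a simplified stand-in for Rosenblatt’s integrated criterion, with a hypothetical fixed bandwidth `h`); and p-values come from a permutation test rather than the asymptotic distributions derived in the papers.

```python
import numpy as np

def ecdf_statistic(x, y):
    """n times the mean squared gap between the joint EDF and the product
    of the marginal EDFs, evaluated at the sample points."""
    le_x = x[None, :] <= x[:, None]                  # le_x[i, j]: X_j <= X_i
    le_y = y[None, :] <= y[:, None]                  # le_y[i, j]: Y_j <= Y_i
    joint = (le_x & le_y).mean(axis=1)               # F_n(X_i, Y_i)
    product = le_x.mean(axis=1) * le_y.mean(axis=1)  # F_n(X_i) * G_n(Y_i)
    return len(x) * np.mean((joint - product) ** 2)

def gaussian_kernel_matrix(z, h):
    # K[i, j] = Gaussian kernel with bandwidth h evaluated at z_i - z_j
    u = (z[:, None] - z[None, :]) / h
    return np.exp(-0.5 * u**2) / (h * np.sqrt(2 * np.pi))

def kde_statistic(x, y, h=0.5):
    """Mean squared gap between the joint KDE and the product of marginal
    KDEs at the sample points (hypothetical fixed bandwidth h)."""
    kx, ky = gaussian_kernel_matrix(x, h), gaussian_kernel_matrix(y, h)
    joint = (kx * ky).mean(axis=1)               # product-kernel joint KDE at (X_i, Y_i)
    product = kx.mean(axis=1) * ky.mean(axis=1)  # marginal KDEs at X_i and at Y_i
    return np.mean((joint - product) ** 2)

def permutation_pvalue(statistic, x, y, n_perm=200, seed=0):
    # Shuffling y breaks any dependence, giving draws from the null distribution
    rng = np.random.default_rng(seed)
    observed = statistic(x, y)
    null = np.array([statistic(x, rng.permutation(y)) for _ in range(n_perm)])
    return (1 + np.sum(null >= observed)) / (n_perm + 1)

rng = np.random.default_rng(42)
x = rng.normal(size=200)
y_dep = x**2 + 0.1 * rng.normal(size=200)  # dependent, but nearly uncorrelated
y_ind = rng.normal(size=200)               # independent of x

for name, y in [("dependent", y_dep), ("independent", y_ind)]:
    print(name,
          permutation_pvalue(ecdf_statistic, x, y),
          permutation_pvalue(kde_statistic, x, y))
```

The permutation approach trades computation for simplicity: it needs no asymptotic theory, and the same helper works for any statistic that is large under dependence.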

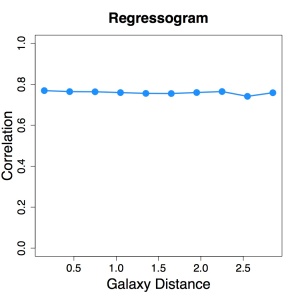

- Energy Test (distance covariance/correlation). An introduction is in [https://en.wikipedia.org/wiki/Distance_correlation]. Székely, Gábor J., Maria L. Rizzo, and Nail K. Bakirov. “Measuring and testing dependence by correlation of distances.” The Annals of Statistics 35, no. 6 (2007): 2769-2794 (http://projecteuclid.org/euclid.aos/1201012979). They define a quantity called distance correlation, which is zero if and only if the two random variables are independent (assuming finite first moments), and show that a scaled version of the sample distance covariance has a finite limiting distribution when the two random variables are independent and diverges under dependence.

- RKHS Test (RKHS: Reproducing Kernel Hilbert Space). Gretton, Arthur, and László Györfi. “Consistent nonparametric tests of independence.” The Journal of Machine Learning Research 11 (2010): 1391-1423 (http://www.jmlr.org/papers/volume11/gretton10a/gretton10a.pdf). This test uses the fact that X and Y are independent if and only if f(X) and g(Y) are uncorrelated for every pair of bounded continuous functions f and g; that is, the two variables stay uncorrelated under all functional transformations. It then uses the reproducing property of the RKHS and the kernel trick (note: this kernel is not the same as the kernel in the kernel test) to construct a test for independence.
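The energy and RKHS statistics are also short to write down. The following sketch assumes NumPy and one-dimensional X and Y. `distance_correlation` is the sample (V-statistic) distance correlation of Székely, Rizzo and Bakirov; `hsic` is the biased empirical Hilbert-Schmidt independence criterion with Gaussian kernels, one common kernel-based statistic, using a hypothetical fixed bandwidth `sigma` rather than any tuning rule from the paper. P-values come from a permutation test, an assumption made here in place of the asymptotic null distributions studied in the papers.

```python
import numpy as np

def double_center(m):
    # Subtract row and column means and add back the grand mean
    return m - m.mean(axis=0) - m.mean(axis=1)[:, None] + m.mean()

def distance_correlation(x, y):
    a = double_center(np.abs(x[:, None] - x[None, :]))  # centered |X_j - X_k|
    b = double_center(np.abs(y[:, None] - y[None, :]))  # centered |Y_j - Y_k|
    dcov2 = max((a * b).mean(), 0.0)                    # squared distance covariance
    dvar2 = (a * a).mean() * (b * b).mean()             # product of squared distance variances
    return 0.0 if dvar2 == 0 else float(np.sqrt(dcov2 / np.sqrt(dvar2)))

def hsic(x, y, sigma=1.0):
    # Biased HSIC estimate trace(K H L H) / n^2, computed as sum((H K H) * L) / n^2
    k = np.exp(-((x[:, None] - x[None, :]) ** 2) / (2 * sigma**2))
    l = np.exp(-((y[:, None] - y[None, :]) ** 2) / (2 * sigma**2))
    return float(np.sum(double_center(k) * l)) / len(x) ** 2

def permutation_pvalue(statistic, x, y, n_perm=200, seed=0):
    # Shuffling y breaks any dependence, giving draws from the null distribution
    rng = np.random.default_rng(seed)
    observed = statistic(x, y)
    null = np.array([statistic(x, rng.permutation(y)) for _ in range(n_perm)])
    return (1 + np.sum(null >= observed)) / (n_perm + 1)

rng = np.random.default_rng(42)
x = rng.normal(size=200)
y = x**2 + 0.1 * rng.normal(size=200)  # dependent but nearly uncorrelated

print("Pearson r:", np.corrcoef(x, y)[0, 1])
print("distance correlation:", distance_correlation(x, y))
print("dCor p-value:", permutation_pvalue(distance_correlation, x, y))
print("HSIC p-value:", permutation_pvalue(hsic, x, y))
```

Because permuting y leaves its distance variance unchanged, using the distance correlation or the distance covariance as the permutation statistic gives the same test.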

As a concluding remark, I would like to point out that there are some ways to “quantify” dependence. For instance, alpha-mixing and beta-mixing are definitions of dependence among a sequence of random variables that are commonly used in time series. Wikipedia has a short introduction to these dependence measures [https://en.wikipedia.org/wiki/Mixing_(mathematics)].